Category: Academic

-

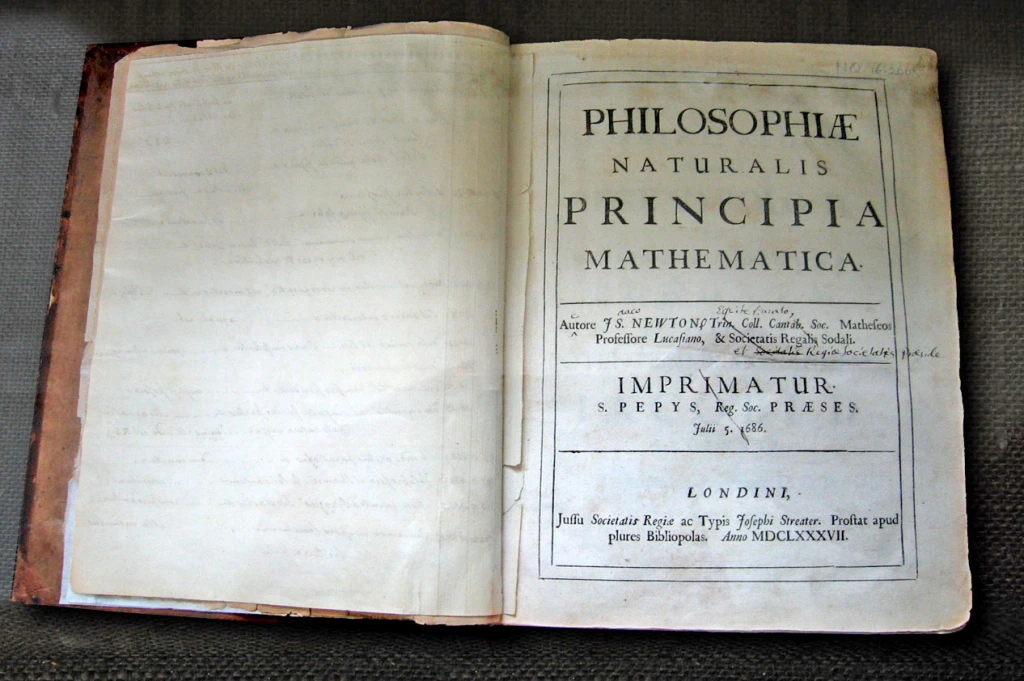

Solving the Three Body Problem: Isaac Newton’s Role in the Rise of Eighteenth-Century Celestial Mechanics

The three-body problem, which concerns the gravitational interaction of three bodies, has remained one of the most intriguing unsolved problems in physics. Kat Jivkova analyzes Isaac Newton’s approach to the three-body problem through his seminal work.

-

Positioned by Strings from Above? – Evaluating Historic Belief in an Orchestrator Controlling Society

Harry Fry explores the concept of a higher power orchestrating human activity, examining it through the lenses of religion, conspiracy theory, political ideology, psychology, and technology.

-

The Haussmann Reconstruction: How did Urban Growth in Paris Change the Social and Political Visibility of Women in the Second Half of the Nineteenth Century?

The second half of the nineteenth century saw significant changes in Europe’s urban environment, occurring against the backdrop of political upheaval following the revolutionary waves of 1848. Nancy Britten looks at the impact of urban growth in Paris on women, socially and politically.

-

Medicine as Autonomy: An Analysis of Enslaved Africans in Seventeenth Century Barbados and Jamaica

Between 1440 and 1720, two million enslaved Africans were forcibly shipped to the Americas. During this time, the practice of medicine played a critical role in the survival and wellbeing of those enslaved and, most importantly, provided them with agency. Nadja Dixon examines this medical expertise.

-

The Role of Sinhala Nationalism in Political Conflict and Violence in Sri Lanka

The complexities of Sri Lanka’s socio-political landscape have been deeply influenced by the ideology of Sinhala nationalism, which espouses belief in the ethnic and religious superiority of the Sinhalese majority. Louisa Steijger examines its violent impacts.

-

Buildings That Mean Death: Israeli Settlements in Palestine

Over the last century, Israeli settlements have increasingly moved onto Palestinian land, often in violation of international treaties. Aliya Okamoto Abdullaeva examines the history and present context of this expansion.

-

Newsreel Narratives: Media Influence and Manipulation in the 1956 Suez Crisis

The 1956 Suez Crisis was heavily covered by the media in both France and Britain. Edie Christian examines how this media coverage was used by the government to justify their actions.

-

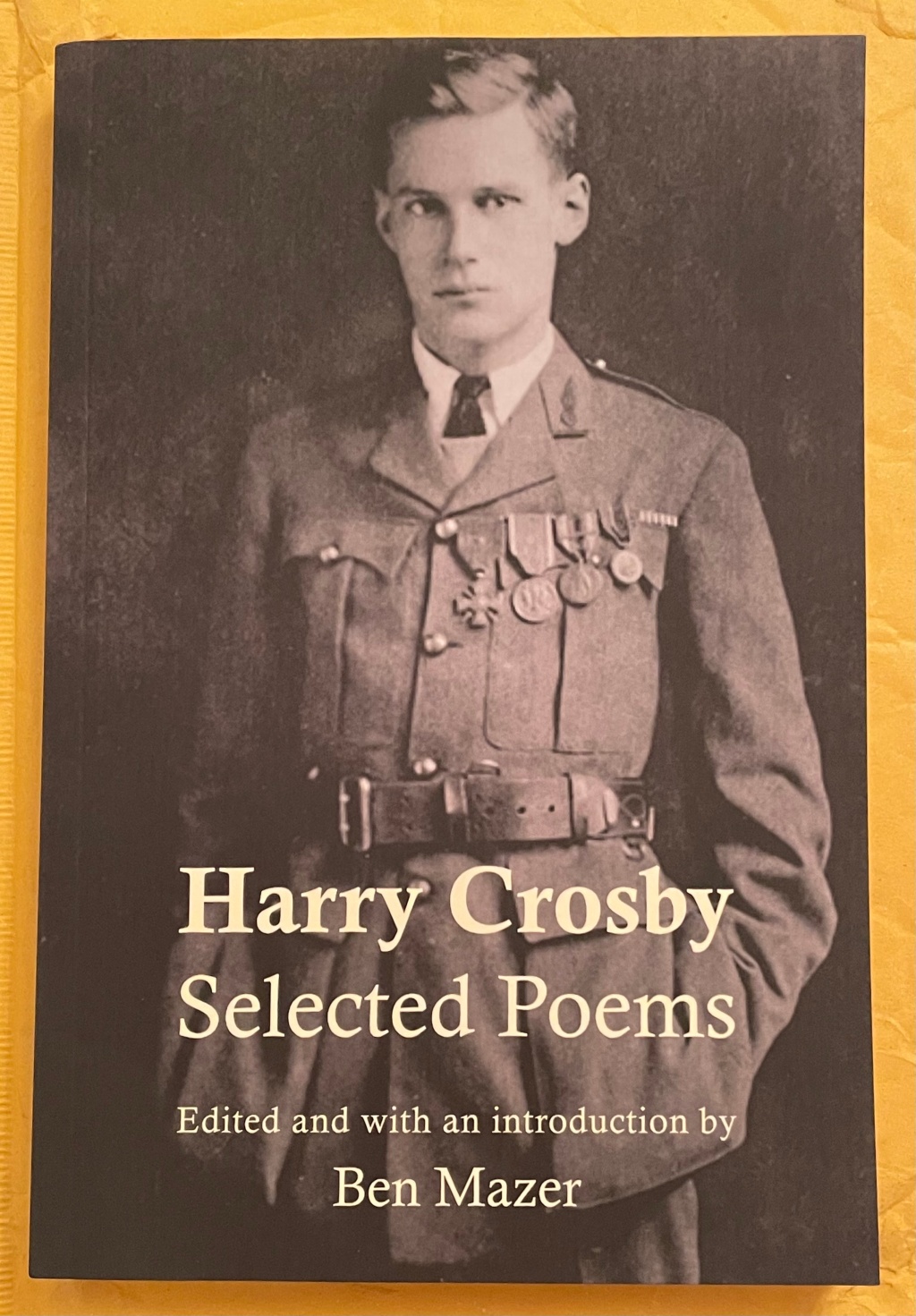

The Oeuvre of Harry Crosby: Art into Reality

Harry Fry delves into the life and work of Harry Crosby, a poet who lived in the early 20th century.